DARPA wants to tackle ‘deepfakes’ with semantic forensics

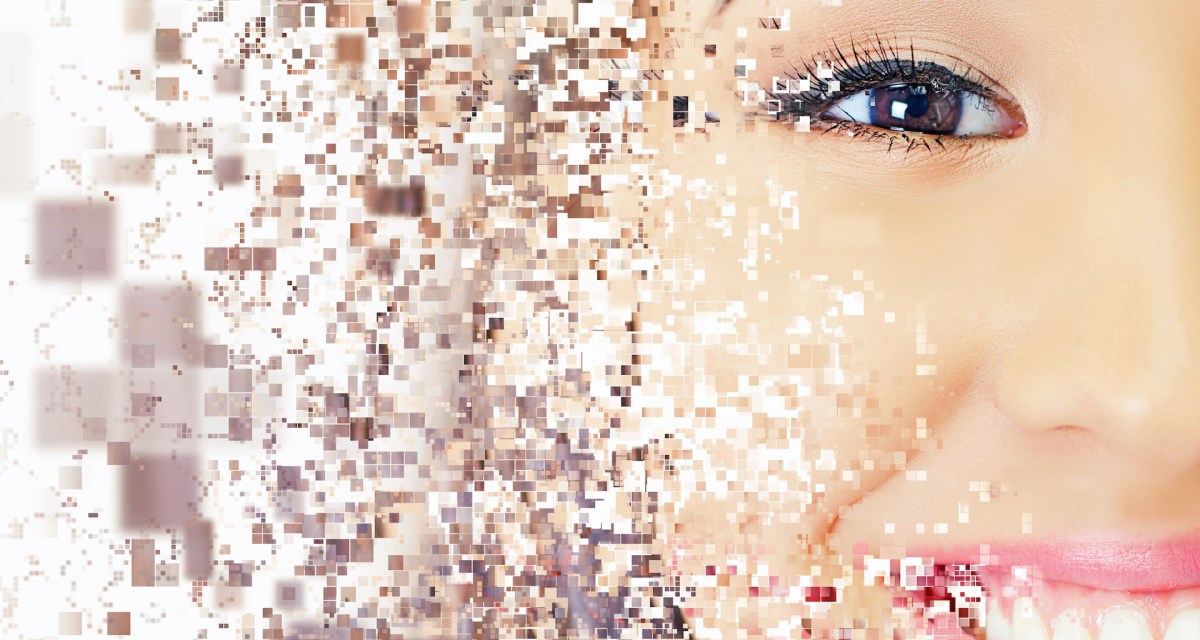

When it comes to detecting whether an image or video is fake, it’s the little mistakes that matter, and to help with the sleuthing, the Defense Advanced Research Projects Agency wants to improve what it calls “semantic forensics.”

The agency announced this week that it plans to hold a proposers day on Aug. 28 to give more information on an anticipated Semantic Forensics (SemaFor) Broad Agency Announcement. It’s the latest expression of DARPA’s interest in countering the chaos-inducing potential of “deepfakes” — the practice of using artificial intelligence to manipulate audio, video, text or photo files.

The SemaFor program, DARPA says, will explore ways to get around some of the weaknesses of current deepfake detection tools. The statistical detection techniques in use have been successful to date, but those tools won’t always have the upper hand.

“Detection techniques that rely on statistical fingerprints can often be fooled with limited additional resources,” the proposers day announcement states. “However, existing automated media generation and manipulation algorithms are heavily reliant on purely data driven approaches and are prone to making semantic errors.”

In practice, that means artificially generated faces, for example, exhibit “semantic inconsistencies” like “mismatched earrings,” DARPA says. The most troublesome deepfakes are those that make real people look like they’re saying or doing things they’ve never actually done.

“These semantic failures provide an opportunity for defenders to gain an asymmetric advantage,” the agency says.

The draft schedule for the proposers day includes briefings by a number of DARPA officials and time for participant Q&A. Would-be attendees must register in advance.

This announcement isn’t DARPA’s first stab at the deepfake challenge. The agency has had a Media Forensics (MediFor) team doing this kind of work since 2016. Its goal, the team’s webpage states, is “to level the digital imagery playing field, which currently favors the manipulator, by developing technologies for the automated assessment of the integrity of an image or video and integrating these in an end-to-end media forensics platform.”