Senate bill would require platforms to get consumer consent before their data is used for AI model training

Online platforms would need to get consent from consumers before using their data to train AI models under new legislation from a pair of Senate Democrats.

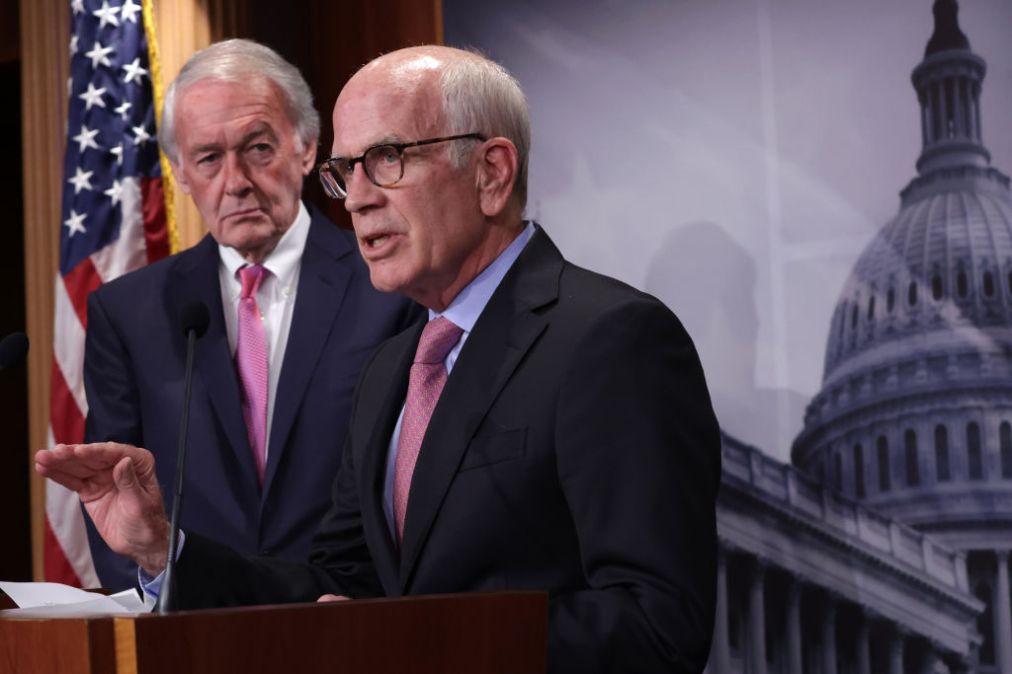

If a company fails to obtain that express informed consent from consumers prior to AI model training, it would be deemed a deceptive or unfair practice and result in enforcement action from the Federal Trade Commission, under the Artificial Intelligence Consumer Opt-In, Notification Standards, and Ethical Norms for Training (AI CONSENT) Act, introduced Wednesday by Sens. Peter Welch, D-Vt., and Ben Ray Luján, D-N.M.

“The AI CONSENT Act gives a commonsense directive to artificial intelligence innovators: get the express consent of the public before using their private, personal data to train your AI models,” Welch said in a statement. “This legislation will help strengthen consumer protections and give Americans the power to determine how their data is used by online platforms. We cannot allow the public to be caught in the crossfire of a data arms race, which is why these privacy protections are so crucial.”

Added Luján: “Personally identifiable information should not be used to train AI models without consent. The use of personal data by online platforms already pose great risks to our communities, and artificial intelligence increases the potential for misuse.”

The bill seeks to create standards for disclosures, including a requirement that platforms provide instructions to consumers for how they can affirm or rescind their consent. The option to grant or revoke consent should be made available “at any time through an accessible and easily navigable mechanism,” the bill states, and the selection to withhold or reverse consent must be “at least as prominent as the option to accept” while taking “the same number of steps or fewer as the option to accept.”

The legislation includes various provisions to regulate how the disclosures are presented by platforms, including specifications on visual effects such as font and type size, the placement of a disclosure on a platform, and how the “brevity, accessibility and clarity” of disclosures can ensure that they are “understood by a reasonable person.”

Within a year of the proposed legislation’s adoption, the FTC would be required to produce a report for the Senate Commerce, Science and Transportation and House Energy and Commerce committees on how technically feasible it would be to “de-identify” data as the pace of AI development quickens. The agency would also be charged in the report with assessing measures that platforms could pursue to de-identify user data.

The legislation, which has the backing of the National Consumers League and the consumer rights advocacy nonprofit Public Citizen, is one in a series of AI-related bills coming out of the Senate this year, following 2023 efforts in the chamber on everything from labels and disclosures on AI products to certification processes for critical-impact AI systems.

The Biden administration, meanwhile, has shown a particular interest in open foundation models, while FTC Chair Lina Khan earlier this year announced an agency probe into AI models that unlawfully collect data in ways that jeopardize fair competition.

“The drive to refine your algorithm cannot come at the expense of people’s privacy or security, and privileged access to customers’ data cannot be used to undermine competition,” Khan said during the FTC Tech Summit in January. “We similarly recognize the ways that consumer protection and competition enforcement are deeply connected with privacy violations fueling market power, and market power, in turn, enabling firms to violate consumer protection laws.”