Commerce launches AI safety consortium with more than 200 stakeholders

The Department of Commerce announced a new AI safety consortium with participation from more than 200 companies and organizations, as the Biden administration continues its push to develop guardrails for the technology.

The consortium, which was launched Thursday, is part of the National Institute of Standards and Technology’s AI Safety Institute and will contribute to actions outlined in President Joe Biden’s October AI executive order, the department said in an announcement. That will include the creation of “guidelines for red-teaming, capability evaluations, risk management, safety and security, and watermarking synthetic content,” the agency said.

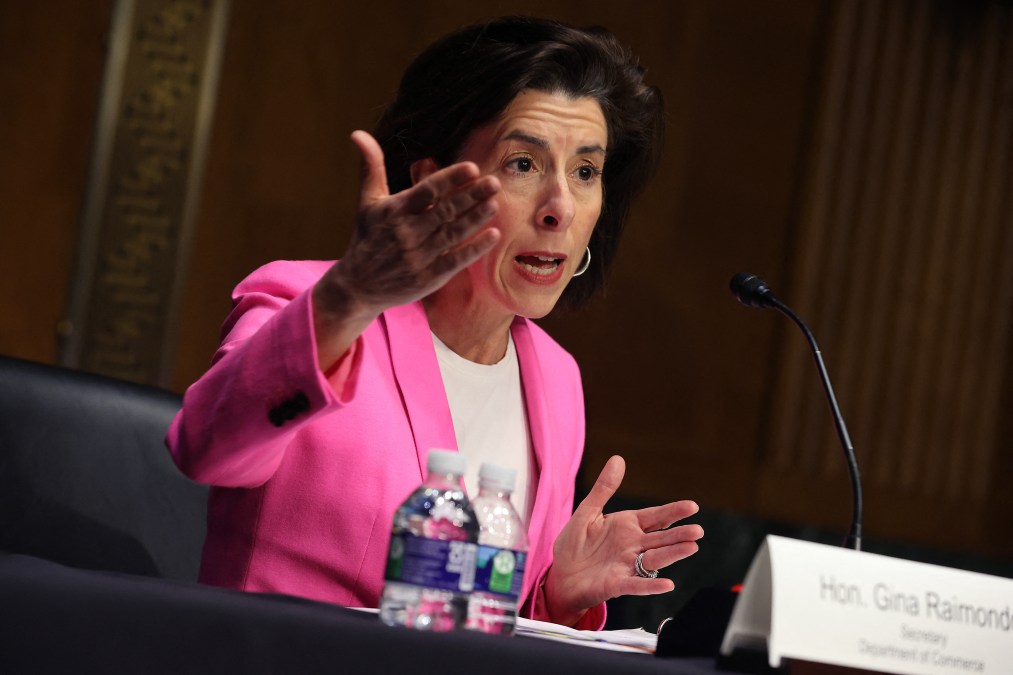

“The job of the consortia is to ensure that the AI Safety Institute’s research and testing is fully integrated with the broad community,” Secretary of Commerce Gina Raimondo said at a press conference announcing the consortium. The work that the safety institute is doing can’t be “done in a bubble separate from industry and what’s happening in the real world,” she added.

Raimondo also highlighted the range of participants in the consortium, calling it “the largest collection of frontline AI developers, users, researchers, and interested groups in the world.”

The consortium’s participants are companies, academic institutions, unions, nonprofits, and other organizations. They include entities such as Amazon, IBM, Apple, OpenAI, Anthropic, the Massachusetts Institute of Technology, and the AFL-CIO Technology Institute (which is listed as a provisional member).

The announcement comes after the safety institute officially got its first leaders. On Wednesday, the Department of Commerce announced Elizabeth Kelly would lead the institute as its director and named Elham Tabassi to serve as chief technology officer. The institute was established last year at the direction of the administration.

After the Thursday press conference, Tabassi told reporters that as the department makes progress on the actions outlined in Biden’s AI order, it is looking to the consortium and institute to “continue to give a long-lasting approach” to those actions.

Participants applauded the announcement as a positive step toward responsible AI.

“The new AI Safety Institute will play a critical role in ensuring that artificial intelligence made in the United States will be used responsibly and in ways people can trust,” Arvind Krishna, IBM’s chairman and chief executive officer, said in a statement.

John Brennan, Scale AI’s public sector general manager, said in a statement that the company “applauds the Administration and its Executive Order on AI for recognizing that test & evaluation and red teaming are the best ways to ensure that AI is safe, secure, and trustworthy.”

Meanwhile, David Zapolsky, Amazon’s senior vice president of global public policy and general counsel, said in a blog post that the company is working with NIST in the consortium “to establish a new measurement science that will enable the identification of proven, scalable, and interoperable measurements and methodologies to promote development of trustworthy AI and its responsible use.”